- Rx Sensitivity (receiving sensitivity)

- Receiving sensitivity is one of the most basic concepts. It characterizes the minimum signal strength the receiver can recognize without exceeding a certain bit error rate. "Bit error rate" here is a general term inherited from the CS (circuit switching) era; in most cases BER (bit error rate) or PER (packet error rate) is used to evaluate sensitivity. In the LTE era, sensitivity is defined simply in terms of throughput, because LTE has no circuit-switched voice channel at all. This is also a real evolution. For the first time, sensitivity is no longer measured against a "standardized stand-in" such as the 12.2 kbps RMC (reference measurement channel, which actually represents a 12.2 kbps speech codec). Instead, it is defined by the throughput users actually experience.

- SNR (signal-to-noise ratio)

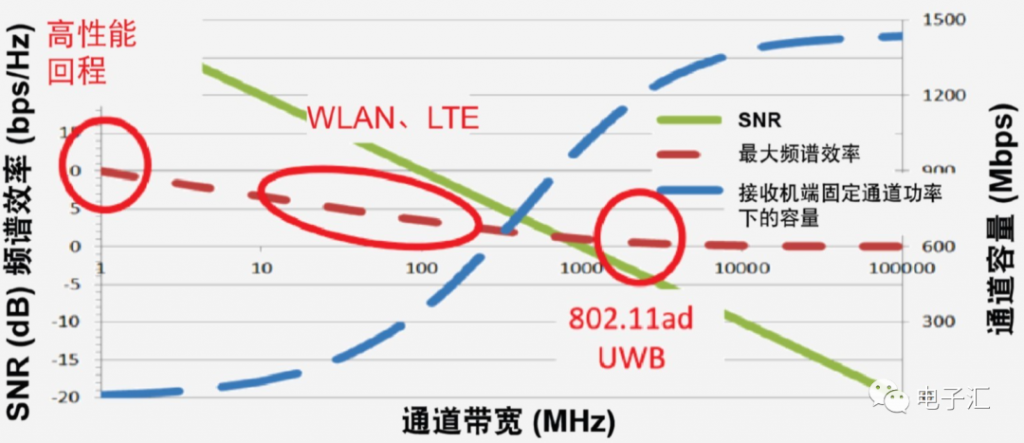

- When we talk about sensitivity, we often refer to SNR (signal-to-noise ratio; here we generally mean the receiver's demodulation SNR). We define the demodulation SNR as the SNR threshold above which the demodulator can still demodulate correctly. (A common interview question gives you a string of NF and gain values, tells you the demodulation threshold, and asks you to derive the sensitivity.) So where do S and N come from?

- S is the signal, i.e. the useful signal. N is the noise, which generally refers to everything that carries no useful information. The useful signal is normally emitted by the transmitter of the communication system, while the sources of noise are very broad. The most typical one is the famous -174 dBm/Hz, the natural noise floor.

Remember that this quantity has nothing to do with the type of communication system. In a sense it is derived from thermodynamics: it is kT at room temperature (about 290 K), so it depends on temperature. Note also that it is a noise power density, hence the unit dBm/Hz. Whatever bandwidth we receive the signal over, we also accept noise over that same bandwidth, so the final noise power is obtained by integrating the noise power density over the bandwidth.
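The noise-floor arithmetic above, together with the interview-style sensitivity estimate, can be sketched in a few lines of Python. This is a minimal illustration under two assumptions: the noise density is flat, and the reference temperature is 290 K. The function names and the example NF, SNR threshold, and bandwidth are hypothetical and are not taken from any standard.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant k


def thermal_noise_density_dbm_per_hz(temp_k: float = 290.0) -> float:
    """kT noise density, converted from W/Hz to dBm/Hz."""
    return 10 * math.log10(BOLTZMANN_J_PER_K * temp_k / 1e-3)


def noise_power_dbm(bandwidth_hz: float, temp_k: float = 290.0) -> float:
    """Integrate a flat noise density over the receive bandwidth."""
    return thermal_noise_density_dbm_per_hz(temp_k) + 10 * math.log10(bandwidth_hz)


def sensitivity_dbm(nf_db: float, snr_demod_db: float, bandwidth_hz: float,
                    temp_k: float = 290.0) -> float:
    """Sensitivity = integrated noise floor + receiver NF + demodulation SNR threshold."""
    return noise_power_dbm(bandwidth_hz, temp_k) + nf_db + snr_demod_db


if __name__ == "__main__":
    print(f"kT at 290 K: {thermal_noise_density_dbm_per_hz():.1f} dBm/Hz")  # about -174.0
    # Hypothetical receiver: 1 MHz bandwidth, NF = 5 dB, demodulation threshold = 10 dB
    print(f"Sensitivity: {sensitivity_dbm(5.0, 10.0, 1e6):.1f} dBm")         # about -99.0
```

The `temp_k` argument is kept because, as noted above, the floor depends on temperature. At 300 K it rises by roughly 0.15 dB.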

- TxPower (transmission power)

- Transmit power matters because the signal from the transmitter goes through space fading before it reaches the receiver, so higher transmit power means a longer communication distance.

So should we pay attention to the SNR of the transmitted signal? For example, if our transmitted signal has a poor SNR, is the SNR of the signal arriving at the receiver also poor?

This brings in the concept just mentioned, the natural noise floor. Assume that space fading acts like an attenuator with the same effect on signal and noise. In fact it does not: the signal can resist fading through coding, but the noise cannot. Now assume the space fading is -200 dB, and the transmitted signal has a bandwidth of 1 Hz, a power of 50 dBm, and an SNR of 50 dB. What SNR does the receiver see?

The signal power at the receiver is 50 - 200 = -150 dBm (in 1 Hz). The transmitter noise is 50 - 50 = 0 dBm; after space fading it reaches the receiver at 0 - 200 = -200 dBm (in 1 Hz). By then this noise is already "submerged" below the natural noise floor of -174 dBm/Hz. To calculate the noise at the receiver input, we therefore only need to consider the "basic component" of -174 dBm/Hz. The received SNR is about -150 - (-174) = 24 dB, set by the natural noise floor rather than by the transmitter's own 50 dB SNR.

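The worked example above can be checked numerically by adding the attenuated transmitter noise and the natural noise floor in linear power. This sketch adopts the text's simplification that fading attenuates noise exactly as much as signal. The function names are illustrative, and the 100 dB short-range case is a hypothetical addition.

```python
import math


def db_to_lin(x_db: float) -> float:
    return 10 ** (x_db / 10)


def lin_to_db(x: float) -> float:
    return 10 * math.log10(x)


NATURAL_NOISE_FLOOR_DBM_PER_HZ = -174.0


def received_snr_db(tx_power_dbm: float, tx_snr_db: float, path_loss_db: float,
                    bandwidth_hz: float = 1.0) -> float:
    """Receiver-input SNR when transmitter noise and the natural noise floor add in power.

    Simplification from the text: space fading attenuates the transmitter's
    noise by exactly as much as its signal.
    """
    signal_dbm = tx_power_dbm - path_loss_db
    tx_noise_dbm = tx_power_dbm - tx_snr_db - path_loss_db
    floor_dbm = NATURAL_NOISE_FLOOR_DBM_PER_HZ + lin_to_db(bandwidth_hz)
    total_noise_dbm = lin_to_db(db_to_lin(tx_noise_dbm) + db_to_lin(floor_dbm))
    return signal_dbm - total_noise_dbm


if __name__ == "__main__":
    print(round(received_snr_db(50, 50, 200), 2))  # the text's example: about 24 dB
    print(round(received_snr_db(50, 50, 100), 2))  # hypothetical short range: about 50 dB
```

With 200 dB of loss, the transmitter's 50 dB SNR is irrelevant. With only 100 dB of loss, the transmitter's own noise sits far above the natural floor and sets the received SNR.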
In most communication systems, the transmitter's own noise ends up submerged below the natural noise floor by the time it reaches the receiver.

- ACLR/ACPR

- We group these items together because they all represent part of the "transmitter noise". This is not noise inside the transmit channel, but the portion of the transmitter output that leaks into adjacent channels, which can be collectively called "adjacent channel leakage".

- ACLR and ACPR are actually the same thing: one name is used in terminal testing and the other in base station testing. Both are named after the "Adjacent Channel", because they describe the interference this transmitter causes to other devices. They also share one feature: the power of the interference signal is calculated over a channel bandwidth.

This measurement method reveals the indicator's design purpose. It considers the signal leaked by the transmitter as interference to receivers of the same or a similar standard. The interference falls into the receiver band with the same frequency and bandwidth as a wanted channel, and so forms co-channel interference to the signal the receiver is trying to receive.

In LTE, there are two ACLR test settings, E-UTRA and UTRA. The former describes interference from an LTE system to another LTE system; the latter considers interference from an LTE system to a UMTS system. Accordingly, the measurement bandwidth of E-UTRA ACLR is the occupied bandwidth of the LTE RBs. The measurement bandwidth of UTRA ACLR is the occupied bandwidth of a UMTS signal: 3.84 MHz for the FDD system and 1.28 MHz for the TDD system. In other words, ACLR/ACPR describes a kind of "peer-to-peer" interference, in which leakage of the transmitted signal interferes with the same or a similar communication system.

This definition has great practical significance. In a real network, cells constantly leak signal into neighboring cells. Network planning and network optimization are therefore really a process of maximizing capacity while minimizing interference. Leakage between adjacent cells of the same system is the typical case. From the other direction, the phones of users in a dense crowd can also become sources of mutual interference.

Similarly, the evolution of communication systems has always aimed at a "smooth transition", i.e. upgrading the existing network into the next-generation network. The coexistence of two or even three generations of systems then requires considering the interference between different systems. LTE introduced the UTRA ACLR requirement to account for the RF interference that LTE causes to the previous-generation system when the two coexist with UMTS.
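Because ACLR is a ratio of two integrated powers, each taken over its own measurement bandwidth, it can be sketched as numerical integration of a power spectral density. The PSD shape, the 5 MHz adjacent-channel offset, and the power levels below are hypothetical illustration values, not limits or offsets quoted from 3GPP. Only the 3.84 MHz UTRA measurement bandwidth comes from the text.

```python
import math
from typing import Callable

Psd = Callable[[float], float]  # frequency offset (Hz) -> PSD (dBm/Hz)


def channel_power_dbm(psd: Psd, center_hz: float, bandwidth_hz: float,
                      n_bins: int = 1000) -> float:
    """Integrate a PSD over one channel (midpoint rule) and return the power in dBm."""
    df = bandwidth_hz / n_bins
    start = center_hz - bandwidth_hz / 2
    total_mw = 0.0
    for i in range(n_bins):
        f = start + (i + 0.5) * df               # midpoint of bin i
        total_mw += 10 ** (psd(f) / 10) * df     # dBm/Hz -> mW/Hz, times bin width
    return 10 * math.log10(total_mw)


def aclr_db(psd: Psd, main_bw_hz: float, adj_center_hz: float, adj_bw_hz: float) -> float:
    """Main-channel power minus adjacent-channel power, each in its own bandwidth."""
    return (channel_power_dbm(psd, 0.0, main_bw_hz)
            - channel_power_dbm(psd, adj_center_hz, adj_bw_hz))


def example_psd(f_hz: float) -> float:
    """Hypothetical PSD: flat signal within +/-2.25 MHz, flat leakage skirt outside."""
    return -50.0 if abs(f_hz) <= 2.25e6 else -95.0


if __name__ == "__main__":
    # E-UTRA-style: adjacent channel measured in the same 4.5 MHz bandwidth
    print(round(aclr_db(example_psd, 4.5e6, 5e6, 4.5e6), 2))   # 45.0
    # UTRA-style: same leakage, measured in a 3.84 MHz bandwidth
    print(round(aclr_db(example_psd, 4.5e6, 5e6, 3.84e6), 2))  # about 45.69
```

The same leakage gives a different ACLR figure depending on which measurement bandwidth the adjacent system uses. This is why E-UTRA and UTRA ACLR are specified separately.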

- Modulation Spectrum/Switching Spectrum

- Returning to the GSM system, the Modulation Spectrum and the Switching Spectrum (spectrum due to switching transients) play a similar role with respect to adjacent channel leakage. The difference is that their measurement bandwidth is not the occupied bandwidth of the GSM signal. From their definitions, the modulation spectrum can be seen as measuring interference between synchronized systems, and the switching spectrum as measuring interference between non-synchronized systems. (In fact, if the measurement is not time-gated, the switching spectrum will certainly drown out the modulation spectrum.)

This involves another concept: in the GSM system the cells are not synchronized, even though GSM uses TDMA. In contrast, the cells of TD-SCDMA and the later TD-LTE are synchronized; the saucer- or ball-shaped GPS antenna is a shackle that TDD systems have never shaken off.

Because GSM cells are not synchronized, the power leaked on the rising/falling edge of a burst in cell A may fall into the payload part of cell B. We use the switching spectrum to measure the transmitter's interference to the adjacent channel in this situation. Within the whole 577 µs GSM timeslot, however, the rising/falling edges take up only a small proportion. Most of the time the payload parts of two adjacent cells overlap in time, and in that case the transmitter's interference to the adjacent channel can be evaluated with the modulation spectrum.

- SEM (Spectrum Emission Mask)

- When discussing SEM, first note that it is an "in-band indicator", as distinct from spurious emission. Spurious emission includes SEM in a broad sense, but its real focus is spectrum leakage outside the transmitter's operating band, and it was introduced more from the perspective of EMC (electromagnetic compatibility).

SEM provides a "spectrum mask": when measuring the transmitter's in-band spectrum leakage, we check whether any point exceeds the mask limit. SEM is related to ACLR but not the same. ACLR considers the average power leaked into the adjacent channel, so it uses the channel bandwidth as the measurement bandwidth and reflects the transmitter's "noise floor" in the adjacent channel. SEM instead uses a small measurement bandwidth (often 100 kHz to 1 MHz) to catch points in the adjacent band that exceed the limit, so it reflects "spurious emissions riding on the noise floor".

If you scan the SEM with a spectrum analyzer, you can see that the spurious points in the adjacent channels are generally higher than the average ACLR level. If the ACLR itself has no margin, the SEM will therefore fail easily. Conversely, an SEM failure does not necessarily mean the ACLR is bad. A common case is an LO spur, or a modulation product of some clock with the LO, coupling into the transmit chain; such components are often narrowband, close to a single tone. The SEM may then fail even though the ACLR is fine.
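The mask check described above amounts to comparing every measured point against the limit of the mask segment it falls in. The mask segments, limits, and measured points below are hypothetical and are not a 3GPP mask. The sketch also assumes each point was already measured in the resolution bandwidth of its segment.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MaskSegment:
    start_offset_hz: float  # inclusive, measured from the carrier center
    stop_offset_hz: float   # exclusive
    limit_dbm: float        # limit in this segment's measurement bandwidth
    rbw_hz: float           # measurement bandwidth the limit applies to


def sem_violations(points: List[Tuple[float, float]],
                   mask: List[MaskSegment]) -> List[Tuple[float, float, float]]:
    """Return (offset, measured, limit) for every point above the mask.

    Each point is (offset_hz, power_dbm), assumed to be measured already in the
    RBW of the segment it falls into.
    """
    violations = []
    for offset_hz, power_dbm in points:
        for seg in mask:
            if seg.start_offset_hz <= abs(offset_hz) < seg.stop_offset_hz:
                if power_dbm > seg.limit_dbm:
                    violations.append((offset_hz, power_dbm, seg.limit_dbm))
                break
    return violations


# Hypothetical mask and measurement, for illustration only
MASK = [MaskSegment(2.5e6, 3.5e6, -13.0, 100e3),
        MaskSegment(3.5e6, 7.5e6, -10.0, 1e6)]
POINTS = [(2.6e6, -30.0), (3.0e6, -9.0),   # 3.0 MHz: a narrow LO/clock spur
          (5.0e6, -25.0), (-4.0e6, -24.0)]

if __name__ == "__main__":
    print(sem_violations(POINTS, MASK))  # [(3000000.0, -9.0, -13.0)]
```

A single narrow spur fails the mask while the broadband leakage stays well inside it. This is the "SEM fails, ACLR fine" situation described in the text.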

- EVM (Error Vector Magnitude)

- First of all, EVM is built on a vector. The error vector between the actual signal and the ideal signal has both amplitude and phase, and EVM is its magnitude, normalized to the reference signal. This measure expresses the "quality" of the transmitted signal well: the farther the actual constellation point lies from the ideal point, the larger the error and the larger the EVM.

Earlier, under TxPower, we explained why the SNR of the transmitted signal is usually not that important. There are two reasons. First, the SNR of the transmitted signal is often much higher than the SNR the receiver needs for demodulation. Second, receiver sensitivity describes the receiver's worst case: after a large amount of space fading, the transmitter noise has already been submerged under the natural noise floor, and the useful signal has been attenuated to near the receiver's demodulation threshold.

However, the transmitter's "inherent SNR" does need to be considered in some cases, such as short-range wireless communication, typically the 802.11 series. By the time 802.11 evolved to 802.11ac, 256QAM modulation had been introduced. Even without considering space fading, the receiver needs a high SNR just to demodulate such a high-order quadrature modulation. The worse the EVM, the worse the SNR and the harder the signal is to demodulate.

Engineers working on 802.11 systems often use EVM to measure Tx linearity, while engineers working on 3GPP systems prefer to use ACLR/ACPR/spectrum to measure Tx linearity.

Looking at their origins, 3GPP is the evolution path of cellular communication, which from the very beginning had to pay attention to interference in the adjacent and alternative channels. In other words, interference is the number one obstacle limiting cellular data rates. 3GPP has therefore always aimed at "minimizing interference" throughout its evolution: frequency hopping in the GSM era, spread spectrum in the UMTS era, and the RB concept in the LTE era all serve this goal.

The 802.11 system evolved from fixed wireless access and follows the spirit of the TCP/IP protocol, with the goal of "best-effort service". In 802.11, time division or frequency hopping is often used for multi-user coexistence. The network layout is more flexible (after all, it is mainly a local area network), and the channel width is also flexible. In general, 802.11 is not sensitive to (or rather, is tolerant of) interference.

In layman's terms, cellular communication started out for phone calls, and users who cannot get through will go and complain at the telecom bureau. 802.11 started out as a local area network, and when the network is poor, data simply arrives more slowly (through error correction and retransmission).

This is why the 3GPP series must use "spectrum regrowth" metrics such as ACLR/ACPR as indicators, while the 802.11 series can adapt to the network environment by sacrificing speed. Specifically, "sacrificing speed to adapt to the network environment" means that 802.11 uses different modulation orders for different propagation conditions. When the receiver finds the signal is poor, it immediately tells the transmitter at the other end to reduce the modulation order, and vice versa. As mentioned earlier, in 802.11 the SNR is strongly correlated with the EVM, and reducing the EVM can improve the SNR substantially.

We therefore have two ways to improve receiving performance. One is to reduce the modulation order, which lowers the demodulation threshold. The other is to reduce the transmitter EVM, which improves the signal SNR.

Because EVM is closely tied to the receiver's demodulation performance, EVM is used to measure transmitter performance in 802.11 systems. (Likewise, in cellular systems defined by 3GPP, ACPR/ACLR is the indicator that mainly affects network performance.) EVM degradation is mainly caused by nonlinearity, such as the AM-AM distortion of the PA, so EVM is usually used as a measure of the transmitter's linearity.
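To make the EVM/SNR link above concrete, here is a minimal sketch of RMS EVM (error-vector power normalized to the average reference power) and the SNR it implies. The relation SNR ≈ 1/EVM² is an assumption: it holds when the error behaves like additive noise. The QPSK points and the error applied to them are illustrative only.

```python
import math
from typing import Sequence


def evm_rms(measured: Sequence[complex], ideal: Sequence[complex]) -> float:
    """RMS EVM: error-vector power normalized to the average reference power."""
    err_power = sum(abs(m - r) ** 2 for m, r in zip(measured, ideal))
    ref_power = sum(abs(r) ** 2 for r in ideal)
    return math.sqrt(err_power / ref_power)


def snr_from_evm_db(evm: float) -> float:
    """SNR ~ 1/EVM^2, valid only when the error behaves like additive noise."""
    return -20 * math.log10(evm)


if __name__ == "__main__":
    ideal = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]    # QPSK reference points
    measured = [s * (1 + 0.05j) for s in ideal]   # illustrative error on each point
    evm = evm_rms(measured, ideal)
    print(f"EVM = {evm * 100:.1f} %, SNR ~ {snr_from_evm_db(evm):.1f} dB")  # EVM = 5.0 %, SNR ~ 26.0 dB
```

The error used here is mostly a phase rotation. EVM captures it because it compares complex points, both amplitude and phase, against the ideal constellation.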

- 7.1 The relationship between EVM and ACPR/ACLR

- It is difficult to define a quantitative relationship between EVM and ACPR/ACLR. From the perspective of amplifier nonlinearity, EVM and ACPR/ACLR should be positively correlated: the amplifier's AM-AM and AM-PM distortion enlarges the EVM and is also the main source of ACPR/ACLR.

However, EVM and ACPR/ACLR are not always positively correlated. A typical counterexample is clipping (peak clipping), commonly used in digital IF processing. Clipping reduces the peak-to-average ratio (PAR) of the transmitted signal, and the lower peak power helps reduce the ACPR/ACLR after the PA. But clipping also damages EVM. Whether it is done by windowing or by filtering, it distorts the signal waveform and therefore increases EVM.
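The trade-off above can be seen directly by hard-clipping a signal and measuring both PAR and EVM. As a deliberately extreme test signal, this sketch uses eight equal-phase tones, whose PAR is exactly 10·log10(8) ≈ 9.03 dB, so the resulting EVM is large. Simple magnitude clipping stands in for the windowing or filtering methods mentioned in the text. The ACLR improvement after a PA would require a PA model and is not shown.

```python
import cmath
import math
from typing import List


def multitone(n_tones: int, n_samples: int) -> List[complex]:
    """Equal-phase tones: all peaks align at t = 0, a worst-case PAR test signal."""
    return [sum(cmath.exp(2j * math.pi * k * t / n_samples) for k in range(n_tones))
            for t in range(n_samples)]


def par_db(x: List[complex]) -> float:
    """Peak power over mean power, in dB."""
    powers = [abs(v) ** 2 for v in x]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


def hard_clip(x: List[complex], threshold: float) -> List[complex]:
    """Limit the magnitude to `threshold`, keeping the phase."""
    return [v if abs(v) <= threshold else v / abs(v) * threshold for v in x]


def evm_rms(measured: List[complex], ideal: List[complex]) -> float:
    err = sum(abs(m - r) ** 2 for m, r in zip(measured, ideal))
    ref = sum(abs(r) ** 2 for r in ideal)
    return math.sqrt(err / ref)


if __name__ == "__main__":
    x = multitone(8, 64)
    rms = math.sqrt(sum(abs(v) ** 2 for v in x) / len(x))
    y = hard_clip(x, 2.0 * rms)  # illustrative threshold: about 6 dB above RMS
    print(f"PAR before: {par_db(x):.2f} dB, after: {par_db(y):.2f} dB")
    print(f"EVM introduced by clipping: {evm_rms(y, x) * 100:.1f} %")
```

Lowering the clip threshold pushes PAR down further and EVM up further. This is why clipping is tuned against the EVM budget rather than maximized.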

- 7.2 The origin of PAR

- PAR (signal peak-to-average ratio) is usually expressed by a statistical function such as the CCDF, whose curve gives each power (amplitude) level of the signal and the probability of exceeding it. For example, if the average power of a signal is 10 dBm and the probability of its power exceeding 15 dBm is 0.01%, we can consider its PAR to be 5 dB.

PAR is an important factor in transmitter spectrum regrowth (ACLR/ACPR/modulation spectrum, for example) in modern communication systems. Peak power pushes the amplifier into its nonlinear region and causes distortion, and the higher the peak power, the stronger the nonlinearity.

In the GSM era, GMSK modulation has a constant envelope, so PAR = 0 dB, and we often pushed a GSM power amplifier to P1dB to get maximum efficiency. After EDGE was introduced, 8PSK modulation no longer had a constant envelope, so we typically set the PA's average output power about 3 dB below P1dB, because the PAR of an 8PSK signal is 3.21 dB.

In the UMTS era, whether WCDMA or CDMA, the peak-to-average ratio is much larger than that of EDGE. The reason is the correlation of signals in a code division multiple access system. When the signals of multiple code channels are superimposed in the time domain, their phases may line up, and the power peaks at that moment.

In LTE, the peak-to-average ratio comes from the burstiness of RB allocation. OFDM modulation divides multi-user/multi-service data into blocks in both the time domain and the frequency domain, so high power may appear in a particular "time block". The LTE uplink uses SC-FDMA: a DFT first spreads the time-domain signal over the frequency domain, which amounts to "smoothing" the burstiness in the time domain and thus lowers the PAR.
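The CCDF-based definition above can be sketched as a quantile. Find the power level exceeded with the chosen probability (0.01% in the text's example) and express it relative to the mean power. The test signals are synthetic. A constant-envelope signal (the GMSK-like case) should give 0 dB. For a complex Gaussian signal, power is exponentially distributed, so theory predicts about 10·log10(ln 10⁴) ≈ 9.6 dB at 0.01%.

```python
import math
import random
from typing import Sequence


def par_at_probability_db(samples: Sequence[complex], prob: float = 1e-4) -> float:
    """Power level exceeded with probability `prob`, relative to the mean power (dB)."""
    powers = sorted(abs(s) ** 2 for s in samples)
    mean_power = sum(powers) / len(powers)
    idx = min(len(powers) - 1, int((1 - prob) * len(powers)))  # CCDF quantile
    return 10 * math.log10(powers[idx] / mean_power)


if __name__ == "__main__":
    rng = random.Random(1)
    # Synthetic constant-envelope signal: random phases, unit amplitude
    constant_envelope = [cmath_like for cmath_like in
                         (complex(math.cos(p), math.sin(p))
                          for p in (rng.uniform(0, 2 * math.pi) for _ in range(10_000)))]
    # Synthetic Gaussian-like signal, a rough stand-in for many superimposed code channels
    gaussian_like = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(100_000)]
    print(f"constant envelope: {par_at_probability_db(constant_envelope):.2f} dB")
    print(f"Gaussian-like:     {par_at_probability_db(gaussian_like):.2f} dB")
```

The estimate at 0.01% rests on only about ten samples above the threshold, so use many samples (here 100,000) before trusting the second decimal.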

- Summary of interference indicators

- The "interference indicators" here refer to the sensitivity tests under various kinds of interference, in addition to the receiver's static sensitivity. It is actually interesting to study the origins of these test items.

Our common interference indicators include Blocking, Desense, Channel Selectivity, etc.

- 8.1 Blocking

- Blocking is actually a very old RF indicator that dates back to the early days of radar. The principle is to inject a large signal into the receiver (the first-stage LNA usually suffers most), driving the amplifier into its nonlinear region or even into saturation. The amplifier's gain then drops sharply, and at the same time it produces very strong nonlinearity, so it can no longer amplify the useful signal properly.

Another possible form of blocking is actually caused by the receiver's AGC. A large signal enters the receiver chain, and the AGC reduces the gain to protect the dynamic range. If the useful signal entering the receiver is very weak at the same time, the gain is now insufficient, and the useful signal reaching the demodulator is too small.

Blocking indicators are divided into in-band and out-of-band, mainly because the RF front end generally has a band filter that suppresses out-of-band blockers. In both cases, however, the blocking signal is generally an unmodulated single tone. Unmodulated single tones are actually rare in the real world. In engineering, a single tone is simply a convenient way to (approximately) represent the various narrowband interferers.

Solving blocking is mainly an RF task. Put bluntly, it means raising the receiver's IIP3 and extending its dynamic range. For out-of-band blocking, the rejection of the filter is also very important.
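The textbook view above attributes blocking to gain compression. A quick way to check how much 1 dB of LNA compression actually moves the cascaded noise figure is the Friis formula. The chain values below are hypothetical. The 18 dB to 17 dB LNA gain drop matches Scenario 1 in the blocking discussion further on, and the LNA's own NF is assumed unchanged under compression.

```python
import math
from typing import List, Tuple


def db_to_lin(x_db: float) -> float:
    return 10 ** (x_db / 10)


def cascaded_nf_db(stages: List[Tuple[float, float]]) -> float:
    """Friis formula. stages = [(gain_db, nf_db), ...] from the antenna side backwards."""
    total_f = 0.0
    gain_before = 1.0  # linear gain of all preceding stages
    for i, (gain_db, nf_db) in enumerate(stages):
        f = db_to_lin(nf_db)
        total_f += f if i == 0 else (f - 1) / gain_before
        gain_before *= db_to_lin(gain_db)
    return 10 * math.log10(total_f)


if __name__ == "__main__":
    # Hypothetical chain: LNA, gain block, mixer
    nominal = [(18.0, 1.5), (15.0, 6.0), (10.0, 10.0)]
    compressed = [(17.0, 1.5), (15.0, 6.0), (10.0, 10.0)]  # LNA at P1dB, NF assumed unchanged
    nf0, nf1 = cascaded_nf_db(nominal), cascaded_nf_db(compressed)
    print(f"NF {nf0:.2f} dB -> {nf1:.2f} dB (delta {nf1 - nf0:.3f} dB)")
```

The cascaded NF moves by only a few hundredths of a dB, which is why gain compression alone struggles to explain a large blocking desense.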

- 8.2 AM Suppression

- AM Suppression is an indicator unique to the GSM system. According to its description, the interferer is a GSM-like TDMA signal that is synchronized with the useful signal and has a fixed delay.

This scenario simulates the signals of neighboring cells in a GSM system. The interferer's frequency offset is required to be greater than 6 MHz (the GSM channel bandwidth is 200 kHz), which is a typical neighboring-cell configuration. So we can regard AM Suppression as a measure of the receiver's tolerance of neighboring-cell interference in real GSM operation.

- 8.3 Adjacent (Alternative) Channel Suppression (Selectivity)

- Here we refer to these collectively as "adjacent channel selectivity". In a cellular system, besides co-frequency cells, we also need to consider adjacent-frequency cells. The reason lies in the transmitter indicators ACLR/ACPR/modulation spectrum discussed earlier. Transmitter spectrum regrowth puts strong signals into the adjacent frequencies; generally, the larger the frequency offset, the lower the level, so the adjacent channel is usually the most affected. And because this regrown spectrum is correlated with the transmitted signal, a receiver of the same standard may mistake it for a useful signal and try to demodulate it, like a cuckoo in a magpie's nest.

For example, suppose two neighboring cells A and B happen to use adjacent frequencies. Networks are normally planned to avoid this, so treat it as an extreme scenario. A terminal registered to cell A moves to the border between the two cells, but the signal strengths have not yet reached the handover threshold, so the terminal stays connected to cell A. The ACPR of the base station transmitter in cell B is relatively high, so within the terminal's receive band there is a strong ACPR component from cell B that overlaps in frequency with the useful signal of cell A. Because the terminal is now far from the cell A base station, the useful signal it receives from cell A is also very weak. When the ACPR component of cell B enters the terminal receiver, it causes co-channel interference to the original useful signal.

If we look at the frequency offsets defined for adjacent channel selectivity, we find the distinction between Adjacent and Alternative, corresponding to the first and second adjacent channels of ACLR/ACPR. This shows that, in the communication protocols, "transmitter spectrum leakage (regrowth)" and "receiver adjacent channel selectivity" are actually defined as a pair.

- 8.4 Co-Channel Suppression (Selectivity)

- This refers to strictly co-frequency interference, generally the interference pattern between two co-frequency cells.

According to the networking principle described earlier, two cells using the same frequency should be as far apart as possible. Yet no matter how far apart they are, they still leak signals into each other; only the strength differs. To the terminal, the signals of both cells look like "correct, useful signals" (of course, the protocol layer has a set of access rules to prevent such wrong access). Whether the terminal's receiver can keep "the west wind from overwhelming the east wind" depends on its co-channel selectivity.

- 8.5 Summary

- Blocking is "a large signal interfering with a small signal", where RF design still has room to maneuver. Indicators such as AM Suppression and Adjacent (Co/Alternative) Channel Suppression (Selectivity) are "a small signal interfering with a large signal": pure RF work can do little there, and the result depends mainly on physical-layer algorithms.

Single-tone Desense is an indicator unique to the CDMA system. Its characteristic is that the interfering single tone is an in-band signal very close to the useful signal. This can produce two kinds of signals that fall into the receive band. The first comes from the LO's close-in phase noise. The LO mixes with the useful signal to form the baseband signal, while the LO phase noise mixes with the interferer; both products fall within the receiver's baseband filter, the former being the useful signal and the latter interference. The second comes from nonlinearity in the receiver. The useful signal, which has a certain bandwidth (e.g. a 1.2288 MHz CDMA signal), may intermodulate with the interferer in a nonlinear device, and the intermodulation products may also fall into the receive band as interference.

Single-tone desense originated when North America launched CDMA in the same frequency band as the existing analog system AMPS, and the two networks coexisted for a long time. As the latecomer, the CDMA system had to consider interference from the AMPS system.

This reminds me of PHS, whose reputation was that calls would only get through if you stood still. Because PHS long occupied 1900 to 1920 MHz, TD-SCDMA/TD-LTE deployments in Band 39 in China stayed in the lower part of the band, 1880 to 1900 MHz, until PHS was withdrawn from the network.
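The first single-tone desense mechanism above, LO phase noise mixing the tone into the channel (reciprocal mixing), can be estimated with a short power budget. This sketch covers only that mechanism; the intermodulation path is not modeled. It assumes the phase noise is flat across the channel. The tone level, phase noise, and receiver NF are hypothetical; only the 1.2288 MHz CDMA bandwidth comes from the text.

```python
import math


def db_sum(*levels_dbm: float) -> float:
    """Power sum of uncorrelated contributions given in dBm."""
    return 10 * math.log10(sum(10 ** (p / 10) for p in levels_dbm))


def reciprocal_mixing_dbm(tone_dbm: float, phase_noise_dbc_per_hz: float,
                          bandwidth_hz: float) -> float:
    """Tone power translated into the channel by LO phase noise at the tone's offset.

    Assumes the phase noise is flat across the channel bandwidth.
    """
    return tone_dbm + phase_noise_dbc_per_hz + 10 * math.log10(bandwidth_hz)


def desense_db(interference_dbm: float, noise_floor_dbm: float) -> float:
    """Rise of the effective noise floor when the interference is added to it."""
    return db_sum(interference_dbm, noise_floor_dbm) - noise_floor_dbm


if __name__ == "__main__":
    bw = 1.2288e6                                  # CDMA channel bandwidth from the text
    floor = -174 + 10 * math.log10(bw) + 7.0       # hypothetical receiver NF of 7 dB
    rm = reciprocal_mixing_dbm(-30.0, -140.0, bw)  # hypothetical tone level and phase noise
    print(f"reciprocal mixing {rm:.1f} dBm, noise floor {floor:.1f} dBm, "
          f"desense {desense_db(rm, floor):.2f} dB")
```

With these numbers, the reciprocal-mixing product sits 3 dB below the receiver noise floor and still raises it by about 1.8 dB. This shows why close-in phase noise matters for an in-band tone.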
The textbook explanation of blocking is fairly simple: a large signal entering the receiver amplifier drives it into the nonlinear region, and the actual gain (for the useful signal) drops.

But this makes two scenarios hard to explain.

Scenario 1: the linear gain of the first-stage LNA is 18 dB, and when a large signal drives it to P1dB, the gain becomes 17 dB. If no other effects are introduced (the LNA's NF and so on are assumed unchanged), the impact on the noise figure of the whole system is very limited. The only change is that, in the cascaded NF formula, the gain that divides the later stages' noise contribution becomes smaller, which has little effect on the overall sensitivity.

Scenario 2: the first-stage LNA has a very high IIP3 and is unaffected, but the second-stage gain block is affected (the interferer drives it to P1dB). In this case, the impact on the overall NF is even smaller.

Let me offer a view to start the discussion. The impact of blocking may have two parts: one is the gain compression described in textbooks, and the other is that the useful signal is distorted once the amplifier enters the nonlinear region. This distortion may itself include two parts. One is spectrum regrowth (harmonic components) of the useful signal caused purely by the amplifier's nonlinearity. The other is cross modulation of the small signal by the large signal. This suggests a further idea for simplifying the blocking test, since 3GPP requires a frequency sweep that is very time-consuming. We could select the frequency points at which a blocking signal has the greatest impact on the distortion of the useful signal.

Intuitively, these frequency points may include f0/N and f0*N, where f0 is the useful signal frequency and N is a natural number. At f0/N, the Nth harmonic generated by the large signal itself in the nonlinear region lands exactly on the useful signal frequency f0 and forms direct interference. At f0*N, the blocker lands on the Nth harmonic of the useful signal and thereby alters the time-domain waveform of the output at f0. The reason is Fourier series decomposition: a periodic time-domain waveform is the sum of its fundamental and each of its harmonics. When the power of the Nth harmonic changes in the frequency domain, the corresponding change in the time domain is a change in the signal envelope, i.e. distortion.
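The proposal above reduces to a short list of candidate blocker frequencies. This sketch only enumerates f0/N and f0*N for N ≥ 2 (N = 1 is the wanted frequency itself) within an optional sweep range. It is not a 3GPP test procedure. The 1950 MHz wanted frequency and the 12.75 GHz upper limit in the example are hypothetical.

```python
from typing import List, Optional


def harmonic_blocking_candidates(f0_hz: float, n_max: int = 3,
                                 f_min_hz: Optional[float] = None,
                                 f_max_hz: Optional[float] = None) -> List[float]:
    """Candidate blocker frequencies f0/N and f0*N for N = 2..n_max.

    N = 1 is skipped because it is the wanted frequency itself. The optional
    limits restrict the list to the range a test setup actually covers.
    """
    candidates = set()
    for n in range(2, n_max + 1):
        for f in (f0_hz / n, f0_hz * n):
            if (f_min_hz is None or f >= f_min_hz) and (f_max_hz is None or f <= f_max_hz):
                candidates.add(f)
    return sorted(candidates)


if __name__ == "__main__":
    # Hypothetical wanted frequency of 1950 MHz, sweep limited to 12.75 GHz
    for f in harmonic_blocking_candidates(1.95e9, n_max=4, f_max_hz=12.75e9):
        print(f"{f / 1e6:.1f} MHz")
```

In practice, such a list would only prioritize points for a quick check, and the full sweep would still be needed for conformance.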

- Dynamic range, temperature compensation and power control

- Dynamic range, temperature compensation, and power control are mostly "invisible" indicators that only show their impact in certain extreme tests, but they represent the most delicate parts of RF design.

- 9.1 Transmitter dynamic range

- The dynamic range of the transmitter is characterized by its maximum and minimum transmit power "without degrading other transmit indicators".

"Without degrading other transmit indicators" sounds very broad. In terms of the main effects, it can be understood as follows: the transmitter's linearity is not degraded at maximum transmit power, and the output signal's SNR is maintained at minimum transmit power.

At maximum transmit power, the transmitter output approaches the nonlinear region of every active stage (especially the final amplifier). The usual symptoms are spectrum leakage and regrowth (ACLR/ACPR/SEM) and modulation error (Phase Error/EVM). What suffers most here is basically the transmitter's linearity, which is fairly easy to understand.

At minimum transmit power, the useful signal output by the transmitter is close to the transmitter's noise floor and is even in danger of being "submerged" in the transmitter noise. What must be guaranteed here is the SNR of the output signal. In other words, the lower the transmitter noise floor at minimum transmit power, the better.

There was an incident in the lab. An engineer testing ACLR found that ACLR got worse as the power was reduced, whereas normally ACLR should improve as output power decreases. His first reaction was that something was wrong with the instrument, but a second instrument gave the same result. Our advice was to test EVM at low output power, and the EVM turned out to be very poor. We concluded that the noise floor at the input of the RF chain was probably very high, so the SNR was obviously very poor. The main component of ACLR was then no longer the amplifier's spectral regrowth, but baseband noise amplified through the amplifier chain.
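The lab incident above can be reproduced with a toy model in which two contributions add in power in the adjacent channel. The first is third-order amplifier regrowth, which is assumed to improve ACLR by 2 dB per dB of back-off. The second is a noise level of fixed absolute power in the adjacent channel, standing in for baseband noise amplified by a chain whose gain does not track the set power. All default values are hypothetical.

```python
import math


def aclr_vs_power_db(pout_dbm: float, p_ref_dbm: float = 23.0,
                     aclr_at_ref_db: float = 35.0,
                     fixed_adj_noise_dbm: float = -60.0) -> float:
    """ACLR when amplifier regrowth and a fixed noise floor add in the adjacent channel.

    Assumptions (hypothetical values):
    - regrowth is third-order, so its ACLR improves 2 dB per dB of back-off from p_ref_dbm;
    - the adjacent-channel noise has a fixed absolute level that does not scale with pout.
    """
    regrowth_aclr_db = aclr_at_ref_db + 2 * (p_ref_dbm - pout_dbm)
    regrowth_adj_mw = 10 ** ((pout_dbm - regrowth_aclr_db) / 10)
    noise_adj_mw = 10 ** (fixed_adj_noise_dbm / 10)
    return pout_dbm - 10 * math.log10(regrowth_adj_mw + noise_adj_mw)


if __name__ == "__main__":
    for p in (23, 10, 0, -10, -20, -30):
        print(f"Pout {p:>4} dBm -> ACLR {aclr_vs_power_db(p):5.1f} dB")
```

ACLR first improves with back-off, then degrades by 1 dB per dB once the fixed noise dominates. A poor low-power EVM points to the same SNR problem.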

- 9.2 Receiver dynamic range

- The dynamic range of the receiver is related to two indicators mentioned before: the reference sensitivity and the receiver IIP3 (mentioned many times in the discussion of interference indicators).

The reference sensitivity characterizes the minimum signal strength the receiver can recognize, so I won't go into detail here. We mainly discuss the receiver's maximum input level, i.e. the largest signal the receiver can receive without distortion. This distortion can occur at any stage of the receiver, from the first-stage LNA to the receiver ADC. For the first-stage LNA, all we can do is raise its IIP3 as much as possible so it can withstand higher input power. For the subsequent stages, the receiver uses AGC (automatic gain control) to keep the useful signal within each device's input dynamic range. Simply put, it is a negative feedback loop: detect the received signal strength (too low/too high), adjust the amplifier gain (up/down), and so keep the amplifier output within the input dynamic range of the next stage.

One exception is worth mentioning: the front-end LNA of most mobile phone receivers has gain control of its own. If you study their datasheets carefully, you will find that the front-end LNA offers several gain states, each with its own noise figure (and IIP3); generally, the higher the gain, the lower the noise figure. This is a simplified design. Its idea is that the receiver RF chain only needs to keep the useful signal at the ADC input within its dynamic range while keeping the SNR above the demodulation threshold. The SNR does not need to be as high as possible; "enough is enough", which is a smart approach. Therefore, when the input signal is large, the first-stage LNA lowers its gain, giving up some NF in exchange for higher IIP3. When the input signal is small, it raises its gain, lowering NF at the cost of IIP3.
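The gain-state behavior described above can be sketched as a simple selection rule: use the highest-gain state whose output still fits the next stage's input range, and fall back to the lowest gain for very strong signals. The state table (gain, NF, IIP3) and the -25 dBm output limit are hypothetical, not values from any datasheet. A real AGC would also add hysteresis between states.

```python
from typing import NamedTuple, Sequence


class LnaState(NamedTuple):
    name: str
    gain_db: float
    nf_db: float
    iip3_dbm: float


# Hypothetical LNA gain states: higher gain -> lower NF but lower IIP3
LNA_STATES = (
    LnaState("high", 18.0, 1.5, -10.0),
    LnaState("mid", 8.0, 4.0, 0.0),
    LnaState("low", -6.0, 10.0, 10.0),
)


def select_lna_state(input_dbm: float, states: Sequence[LnaState] = LNA_STATES,
                     max_output_dbm: float = -25.0) -> LnaState:
    """Pick the highest-gain state whose output stays at or below max_output_dbm.

    max_output_dbm is a hypothetical stand-in for the next stage's input
    dynamic range. If no state fits, fall back to the lowest-gain state.
    """
    for state in sorted(states, key=lambda s: s.gain_db, reverse=True):
        if input_dbm + state.gain_db <= max_output_dbm:
            return state
    return min(states, key=lambda s: s.gain_db)


if __name__ == "__main__":
    for p_in in (-100.0, -60.0, -40.0, -20.0):
        s = select_lna_state(p_in)
        print(f"input {p_in:6.1f} dBm -> {s.name:>4} (gain {s.gain_db} dB, "
              f"NF {s.nf_db} dB, IIP3 {s.iip3_dbm} dBm)")
```

Weak inputs get the low-NF state, and strong inputs get the high-IIP3 state. This is the "enough is enough" trade-off in table form.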

- 9.3 Temperature compensation

- Generally speaking, we only apply temperature compensation to the transmitter. Of course, receiver performance is also affected by temperature. At high temperature the receiver link gain decreases and NF increases; at low temperature the link gain increases and NF decreases. However, because the receiver handles small signals, these changes in gain and NF stay within the system margin. Transmitter temperature compensation can be further divided into two parts: compensation of the transmit power accuracy, and compensation of the transmitter gain variation with temperature. Transmitters in modern communication systems generally use closed-loop power control (except for the slightly "old" GSM and Bluetooth systems). The power accuracy of a transmitter calibrated in production therefore depends on the accuracy of the power control loop. The power control loop is generally a small-signal loop with high temperature stability. It needs little temperature compensation unless it contains temperature-sensitive devices (such as amplifiers). Temperature compensation of the transmitter gain is more common.
This kind of temperature compensation serves two common purposes. One is "visible" and applies to systems without closed-loop power control (such as the aforementioned GSM and Bluetooth). Such systems usually do not require high output power accuracy. A temperature compensation curve (function) keeps the RF link gain within a range, so that with fixed baseband IQ power the output RF power also stays within a certain range as temperature changes. The other is "invisible" and applies to systems with closed-loop power control. There the loop precisely controls the RF output power at the antenna port, but we still need to keep the DAC output signal within a certain range (the most common example is a base station transmitter with digital predistortion, DPD). The gain of the entire RF link must then be held more precisely at a given value, and this is what temperature compensation is for. Transmitter temperature compensation is generally implemented with variable attenuators or variable amplifiers. Early designs with low precision requirements and tight cost constraints commonly used temperature-compensating attenuators. Where higher precision is required, the usual solution is a temperature sensor + digitally controlled attenuator/amplifier + production calibration.
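The "temperature sensor + digitally controlled attenuator + production calibration" scheme can be sketched as follows. Production calibration stores the link gain at a few temperatures. At run time the gain at the sensed temperature is interpolated, and an attenuator code removes the excess above a target gain. The calibration table, the 0.25 dB step and the 6-bit attenuator range are hypothetical values chosen for illustration. A real design would also handle sensor accuracy and hysteresis.

```python
from bisect import bisect_left

# Hypothetical production-calibration table: link gain (dB) measured with the
# attenuator at 0 dB. Gain falls as temperature rises. Illustrative values only.
CAL_TEMPS_C = [-20.0, 25.0, 70.0]
CAL_GAIN_DB = [31.0, 30.0, 28.5]

ATTEN_STEP_DB = 0.25   # assumed attenuator LSB
ATTEN_MAX_CODE = 63    # assumed 6-bit attenuator (0 to 15.75 dB)

def gain_at(temp_c: float) -> float:
    """Piecewise-linear interpolation of the calibrated gain, clamped at the ends."""
    if temp_c <= CAL_TEMPS_C[0]:
        return CAL_GAIN_DB[0]
    if temp_c >= CAL_TEMPS_C[-1]:
        return CAL_GAIN_DB[-1]
    i = bisect_left(CAL_TEMPS_C, temp_c)
    t0, t1 = CAL_TEMPS_C[i - 1], CAL_TEMPS_C[i]
    g0, g1 = CAL_GAIN_DB[i - 1], CAL_GAIN_DB[i]
    return g0 + (g1 - g0) * (temp_c - t0) / (t1 - t0)

def atten_code(temp_c: float, target_gain_db: float) -> int:
    """Attenuator code that brings the link gain down to target_gain_db.
    The target must not exceed the lowest calibrated gain, since the
    attenuator can only remove gain."""
    excess = gain_at(temp_c) - target_gain_db
    code = round(excess / ATTEN_STEP_DB)
    return max(0, min(ATTEN_MAX_CODE, code))
```

With the illustrative table and a 28 dB target, the sketch returns code 8 at 25 °C and code 2 at 70 °C. The attenuator backs off less as the amplifier loses gain when hot, so the total link gain stays close to the target.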

- 9.4 Transmitter power control

- After dynamic range and temperature compensation, let's turn to a related and very important concept: power control. Transmitter power control is a required function in most communication systems. The ILPC, OLPC and CLPC schemes common in 3GPP must all be tested in RF design, often fail, and fail for complicated reasons. Let's first look at why transmitters need power control at all. Transmitter power control serves two purposes: power consumption control and interference suppression. Power consumption control first. In mobile communication, given the distance between the two ends and the interference level, the transmitter only needs to maintain a signal strength that is "just enough for the receiver at the other end to demodulate accurately". If the power is too low, communication quality suffers; if it is too high, the extra power is simply wasted. This is especially true for battery-powered terminals such as mobile phones, where every milliamp counts. Interference suppression is a more advanced requirement. In a CDMA system, different users share the same carrier frequency (distinguished by orthogonal user codes). In the signal arriving at the receiver, each user's signal is therefore co-channel interference to every other user. If users arrive at very different power levels, high-power users will drown out low-power users. CDMA therefore uses power control. The base station measures the power of each user arriving at the receiver (called the air-interface power) and sends power control commands to each terminal, ultimately making every user's air-interface power the same.
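The CDMA air-interface power equalization described above can be illustrated with a minimal up/down closed-loop sketch. In each iteration the base station compares each user's received power with a target and sends a one-bit "up" or "down" command, and the terminal steps its power accordingly. The following are all simplifying assumptions, not values from any specification: fixed path losses (no fading), a 1 dB step, a 24 dBm terminal power cap and the iteration count.

```python
def closed_loop_power_control(tx_dbm, path_loss_db, target_rx_dbm,
                              step_db=1.0, max_tx_dbm=24.0, iterations=100):
    """Simulate up/down power-control commands for several users.

    tx_dbm: initial transmit power per user (dBm).
    path_loss_db: fixed path loss per user (dB); a real channel also fades.
    Returns (final transmit powers, final received air-interface powers).
    """
    tx = list(tx_dbm)
    for _ in range(iterations):
        for k, loss in enumerate(path_loss_db):
            rx = tx[k] - loss
            # Base station: one-bit command, "up" if below target, else "down".
            cmd = step_db if rx < target_rx_dbm else -step_db
            # Terminal: apply the command, never exceeding its maximum power.
            tx[k] = min(max_tx_dbm, tx[k] + cmd)
    rx_final = [t - loss for t, loss in zip(tx, path_loss_db)]
    return tx, rx_final
```

Two users with 80 dB and 120 dB path loss both end up within one step of the -100 dBm target, the near user transmitting far less than the far one. A user with 130 dB path loss hits the 24 dBm cap and arrives at only -106 dBm, which is where coverage, not power control, becomes the limit.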
The CDMA power control described above has two characteristics. First, its accuracy is very high (the tolerance to interference is very low). Second, its control period is very short (the channel can change quickly). In LTE, uplink power control also serves interference suppression. The LTE uplink uses SC-FDMA, and users also share the carrier and interfere with one another, so equalizing the air-interface power is also necessary. GSM also has power control. In GSM, transmit power is set in "power control levels", with adjacent levels 2 dB apart, so GSM power control is relatively coarse. A related concept here is the interference-limited system, and CDMA is a typical example. Theoretically, suppose the user codes were perfectly orthogonal and users could be perfectly separated by spreading and despreading. The capacity of a CDMA system would then be unlimited, because on a finite frequency resource, user codes expanded layer by layer could distinguish infinitely many users. In practice, user codes cannot be perfectly orthogonal, so demodulating multiple users inevitably introduces noise. The more users there are, the higher the noise, until it exceeds the demodulation threshold. In other words, the capacity of a CDMA system is limited by interference (noise). GSM is not an interference-limited system; it is limited in the time and frequency domains. Its capacity is bounded by frequency resources (one carrier per 200 kHz) and time-domain resources (each carrier is shared by 8 TDMA users). Therefore, GSM's power control requirements are modest (coarse steps and a long period).
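The interference-limited behaviour can be illustrated with the textbook single-cell uplink approximation, which is not part of the original text. With perfect power control every user arrives at the same power P. After despreading with processing gain G, each user sees SINR ≈ G·P / (α(N−1)P + N_th). Here α is an assumed residual cross-correlation factor (1 for non-orthogonal codes, 0 for perfect orthogonality) and N_th is thermal noise. The sketch counts how many users fit before the SINR falls below the demodulation threshold. All parameter values are hypothetical.

```python
import math

def dbm_to_mw(dbm: float) -> float:
    """Convert dBm to milliwatts."""
    return 10.0 ** (dbm / 10.0)

def despread_sinr_db(n_users: int, processing_gain_db: float,
                     rx_power_dbm: float, noise_dbm: float,
                     alpha: float = 1.0) -> float:
    """Post-despreading SINR for one user when all n_users arrive at equal power.

    alpha: assumed fraction of each other user's power that survives
    despreading (1.0 = non-orthogonal codes, 0.0 = perfectly orthogonal).
    """
    p = dbm_to_mw(rx_power_dbm)
    interference = alpha * (n_users - 1) * p + dbm_to_mw(noise_dbm)
    return processing_gain_db + 10.0 * math.log10(p / interference)

def max_users(processing_gain_db: float, rx_power_dbm: float, noise_dbm: float,
              demod_threshold_db: float, alpha: float = 1.0,
              limit: int = 10_000) -> int:
    """Largest user count whose SINR still clears the demodulation threshold."""
    n = 0
    while n < limit and despread_sinr_db(n + 1, processing_gain_db, rx_power_dbm,
                                         noise_dbm, alpha) >= demod_threshold_db:
        n += 1
    return n
```

Take G = 20 dB, a 7 dB threshold and negligible thermal noise. The sketch then gives 20 users for α = 1 and 40 for α = 0.5. With α = 0 the count only stops at the artificial `limit`, mirroring the "unlimited capacity" thought experiment above.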

- 9.5 Transmitter Power Control and Transmitter RF Index

- Having covered transmitter power control, let's discuss the RF-design factors that can affect it (I believe many colleagues have faced the frustrating scene of a failed closed-loop power control test). On the RF side, suppose the power detection (feedback) loop is designed correctly. There is then not much more we can do for the transmitter's closed-loop power control, since most of the work is done by physical-layer protocol algorithms. The most important remaining factor is the transmitter's in-band flatness. Transmitter calibration is performed only on a limited number of frequency points, and in production testing the fewer the better. Yet in real operation the transmitter may work on any carrier in the band. A typical production calibration covers the low, mid and high channels of the transmitter. This means transmit power is accurate at those three points, so closed-loop power control is also correct there. If, however, the transmit power is not flat across the band, some channels deviate considerably from the calibration points. Closed-loop power control that uses the calibration points as its reference will then show large errors at those channels, possibly enough to fail the test.
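The effect of in-band flatness can be sketched in a few lines. The transmitter is calibrated at low, mid and high channels, gain at other channels is taken by linear interpolation between them, and the result is compared with a "true" in-band gain. The band edges (1920 to 1980 MHz), the 30 dB nominal gain and the sinusoidal ripple are all hypothetical. The ripple is deliberately chosen to be zero at the calibration channels, so the calibration cannot see it.

```python
import math

CAL_FREQS_MHZ = [1920.0, 1950.0, 1980.0]  # assumed low/mid/high calibration channels

def true_gain_db(freq_mhz: float) -> float:
    """Hypothetical in-band gain: 30 dB nominal plus 1.5 dB ripple (30 MHz period)."""
    return 30.0 + 1.5 * math.sin(2.0 * math.pi * (freq_mhz - 1920.0) / 30.0)

def interp(x: float, xs, ys) -> float:
    """Piecewise-linear interpolation between calibration points (xs ascending)."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            return ys[i] + (ys[i + 1] - ys[i]) * (x - xs[i]) / (xs[i + 1] - xs[i])
    raise ValueError("frequency outside the calibrated band")

def power_error_db(freq_mhz: float) -> float:
    """Output-power error at freq_mhz when the loop trusts the interpolated gain."""
    cal_gains = [true_gain_db(f) for f in CAL_FREQS_MHZ]
    return true_gain_db(freq_mhz) - interp(freq_mhz, CAL_FREQS_MHZ, cal_gains)
```

Sweep the assumed band in 1 MHz steps. The error is zero at all three calibration channels, yet the worst case is just under 1.5 dB. A closed-loop power control test run on such a channel inherits that error.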